Power BI has revolutionized how businesses handle data analysis and visualization, but everything starts with one fundamental step: creating a solid dataset. Whether you’re a beginner trying to make sense of your company’s sales data or an experienced analyst building complex business intelligence solutions, understanding how to properly create and manage datasets in Power BI is absolutely crucial.

Many Power BI projects run into trouble not because of poor visualization choices or inadequate reporting, but because the underlying dataset wasn’t structured correctly from the beginning. A well-designed dataset is the foundation that makes everything else possible, from simple charts to complex analytical dashboards.

This comprehensive guide walks you through everything you need to know about creating datasets in Power BI, from basic concepts to advanced techniques that will make your data analysis more efficient and your insights more reliable.

Understanding Power BI Datasets: The Foundation of Everything

Before diving into the how-to, let’s clarify what a Power BI dataset actually is. Think of a dataset as a structured collection of data that’s been prepared, cleaned, and optimized for analysis and visualization. It’s not just raw data thrown together; it’s data that’s been thoughtfully organized with relationships, calculations, and business logic built in. (Microsoft now labels datasets as “semantic models” in the product; this guide keeps the older, still widely used term.)

A Power BI dataset typically includes:

- Tables containing your raw data from various sources

- Relationships between different tables

- Calculated columns and measures using DAX (Data Analysis Expressions)

- Data types and formatting rules

- Row-level security roles that control which rows each user can see

The power of Power BI becomes evident when you realize that once you create a robust dataset, multiple reports and dashboards can be built on top of it, all sharing the same underlying data structure and business logic.

Different Ways to Create Datasets in Power BI

Method 1: Using Power BI Desktop (Most Common Approach)

Power BI Desktop is where most dataset creation happens, and for good reason. It provides the most comprehensive set of tools for data transformation, modeling, and preparation.

Step-by-Step Process:

Getting Your Data Connected: Start by opening Power BI Desktop and clicking “Get Data” from the Home ribbon. Power BI supports a wide range of data sources, including Excel files, SQL databases, web APIs, and cloud services like SharePoint and Google Analytics.

Choose your data source and establish the connection. For this example, let’s say you’re working with an Excel file containing sales data. Navigate to your file, select it, and Power BI will show you a preview of available tables and sheets.

Initial Data Preview and Selection: The Navigator window shows you all available tables in your data source. This is your first opportunity to be selective about what data you actually need. Don’t automatically import everything; only select tables that are relevant to your analysis goals.

Check the boxes next to the tables you want to include, and you’ll see a preview of the data structure. Pay attention to column names, data types, and the general quality of the data at this stage.

Transform Your Data with Power Query Editor: Instead of clicking “Load” immediately, click “Transform Data” to open the Power Query Editor. This is where the real magic happens in terms of data preparation.

The Power Query Editor provides numerous transformation options:

Data Cleaning Operations:

- Remove duplicate rows that could skew your analysis

- Filter out irrelevant or test data

- Handle missing values by replacing them with appropriate defaults or removing incomplete records

- Standardize text formatting (proper case, trim whitespace, etc.)

Column Management:

- Rename columns to more descriptive, business-friendly names

- Remove unnecessary columns that won’t be used in the analysis

- Split columns containing multiple pieces of information

- Merge columns when you need to combine related data

Data Type Optimization:

- Ensure dates are recognized as date/time data types

- Convert text that represents numbers into proper numeric data types

- Use the Fixed decimal number type for currency and the Percentage type for percentage values

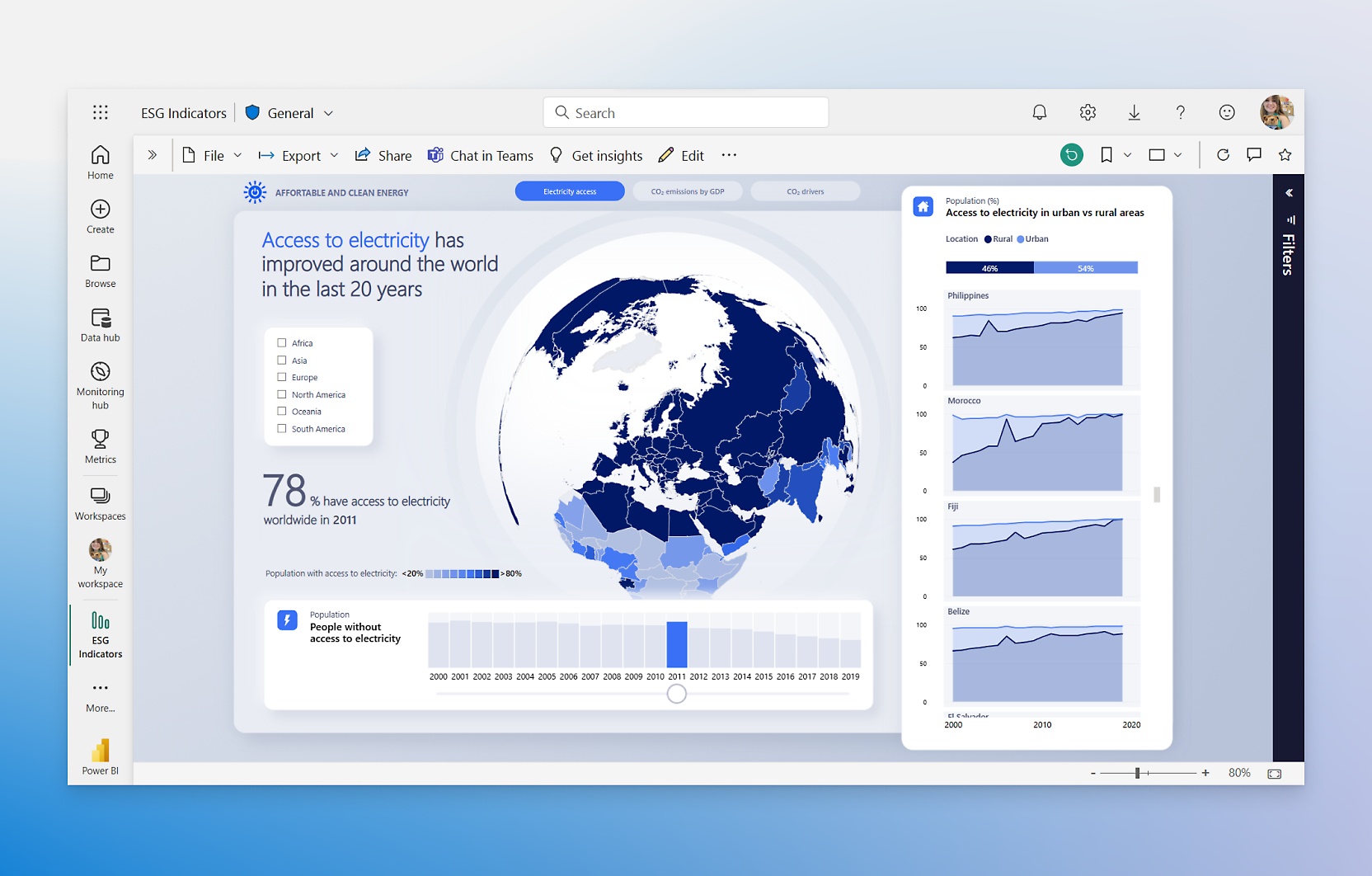

Method 2: Creating Datasets in Power BI Service

The Power BI Service (the web-based version) also allows dataset creation, though with some limitations compared to Power BI Desktop.

Direct Web-Based Creation: You can upload Excel files directly to the Power BI Service and create datasets from them. This approach works well for simpler scenarios where extensive data transformation isn’t required.

Dataflows for Reusable Data Preparation: Power BI dataflows allow you to create reusable data preparation logic that can be shared across multiple datasets. This is particularly valuable in enterprise environments where multiple teams need access to similarly processed data.

Method 3: Programmatic Dataset Creation

For organizations with specific technical requirements, Power BI supports programmatic dataset creation through REST APIs and PowerShell modules. This approach is typically used for automated data pipeline scenarios.

Essential Data Modeling Concepts

Building Relationships Between Tables

Once your data is loaded and cleaned, the next crucial step is establishing relationships between different tables. This is what transforms separate tables into a cohesive data model.

Types of Relationships:

- One-to-Many: Most common relationship type (e.g., one customer can have many orders)

- One-to-One: Less common but useful for splitting large tables

- Many-to-Many: Complex relationships that require careful handling

Best Practices for Relationships: Power BI automatically detects some relationships, but you should always review and optimize them manually. Look for common fields between tables that can serve as connecting points.

Give your data model a clear structure, ideally a star schema. Typically, you’ll have dimension tables (containing descriptive information like customer details and product information) on the “one” side of each relationship and fact tables (containing measurable events like sales transactions and website visits) on the “many” side.
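One way to review a relationship is to look for fact rows whose key has no match in the dimension table. The query below is a minimal sketch that can be run in DAX query view; the Customer table and the CustomerID columns are assumed names used only for illustration.

```dax
// Returns every CustomerID that appears in Sales but not in Customer.
// An empty result means the relationship has no orphaned keys.
// Table and column names are hypothetical.
EVALUATE
EXCEPT (
    DISTINCT ( Sales[CustomerID] ),
    DISTINCT ( Customer[CustomerID] )
)
```

Any keys it returns would be grouped under a blank dimension value in reports, so they are worth fixing at the source or in Power Query.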

Creating Calculated Columns vs Measures

Understanding the difference between calculated columns and measures is fundamental to effective Power BI dataset creation.

Calculated Columns: These are computed row by row when the data is refreshed and are stored in the dataset, so they add to its size. They’re useful for categorization, grouping, and creating new descriptive fields.

Example: Creating a “Season” column based on date information

Season =
IF(
    MONTH(Sales[Date]) IN {12, 1, 2}, "Winter",
    IF(
        MONTH(Sales[Date]) IN {3, 4, 5}, "Spring",
        IF(
            MONTH(Sales[Date]) IN {6, 7, 8}, "Summer",
            "Fall"
        )
    )
)
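The same column can also be written without nesting. The version below is a sketch of an alternative definition, not a second column to add alongside the first; it uses SWITCH with TRUE() and a variable so the month is computed once per row.

```dax
Season =
// Alternative to the nested IF version: same logic, flatter layout.
VAR MonthNumber = MONTH ( Sales[Date] )
RETURN
    SWITCH (
        TRUE (),
        MonthNumber IN { 12, 1, 2 }, "Winter",
        MonthNumber IN { 3, 4, 5 }, "Spring",
        MonthNumber IN { 6, 7, 8 }, "Summer",
        "Fall"
    )
```

SWITCH returns the result of the first condition that evaluates to TRUE, so the final "Fall" acts as the default.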

Measures: These are calculated dynamically based on the current filter context and are ideal for aggregations and business metrics.

Example: Creating a Total Sales measure

Total Sales = SUM(Sales[Amount])
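To see what “calculated dynamically based on the current filter context” means in practice, consider a measure that compares the filtered total with the grand total. This is an illustrative sketch built on the Total Sales measure above; the measure name is an assumption.

```dax
Sales % of Total =
// The numerator respects whatever slicers and visual filters are active.
// The denominator removes all filters from Sales to get the grand total.
DIVIDE (
    [Total Sales],
    CALCULATE ( [Total Sales], REMOVEFILTERS ( Sales ) )
)
```

Placed in a table visual grouped by region, each row would show that region’s share of overall sales, and the values update as report filters change. A calculated column cannot do this, because its values are fixed at refresh time.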

Data Type Optimization for Performance

Choosing the right data types isn’t just about correctness; it significantly impacts performance and dataset size.

| Data Type | Use Case | Performance Impact |
| --- | --- | --- |
| Whole Number | IDs, counts, quantities | Excellent |
| Decimal Number | Currency, percentages | Good |
| Text | Names, descriptions | Moderate |
| Date/Time | Timestamps, dates | Good |
| True/False | Status flags, categories | Excellent |

Memory Optimization Tips:

- Use whole numbers instead of decimal numbers when possible

- Avoid unnecessarily long text fields

- Consider using numeric codes instead of text for categories with many repeated values

- Remove time components from date fields if not needed

Advanced Dataset Creation Techniques

Implementing Row-Level Security (RLS)

For datasets that will be shared across different user groups, implementing row-level security ensures users only see data relevant to their role or department.

Setting Up RLS: Create roles in Power BI Desktop by defining DAX filter expressions that limit data access based on user attributes.

Example: Restricting sales data by region

[Region] = USERNAME()

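Taken literally, this filter only works if each user’s login name matches a region name, which is rarely the case: USERNAME() returns DOMAIN\user in Power BI Desktop and the user principal name (usually an email address) in the Power BI Service. A more practical setup is a user table that maps each login to the regions that user may see.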
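A lookup-based role filter might look like the sketch below. It assumes a hypothetical UserRegions table with UserEmail and Region columns, one row per user and permitted region; neither the table nor its columns come from the original example.

```dax
// Role filter expression for the table that holds the Region column.
// UserRegions, UserEmail and its Region column are assumed names.
[Region]
    IN SELECTCOLUMNS (
        FILTER (
            UserRegions,
            UserRegions[UserEmail] = USERPRINCIPALNAME ()
        ),
        "Region", UserRegions[Region]
    )
```

USERPRINCIPALNAME() returns the signed-in user’s principal name in both Desktop and the Service, which makes the role easier to test with “View as” before publishing. A user with several rows in the mapping table sees all of the listed regions.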

Creating Composite Models

For complex scenarios involving both imported data and DirectQuery sources, composite models allow you to combine different data connectivity modes within a single dataset.

This is particularly useful when you have large transaction tables that should remain in DirectQuery mode for real-time access, combined with smaller reference tables that can be imported for better performance.

Implementing Incremental Refresh

For large datasets that grow over time, incremental refresh allows you to refresh only new or changed data rather than the entire dataset.

Setting Up Incremental Refresh: Define date/time parameters that Power BI uses to determine which data to refresh. This dramatically reduces refresh times and resource consumption for large datasets.

Configuration Steps:

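- Create RangeStart and RangeEnd parameters in Power Query; both must use the Date/Time type, and the names are reserved and case-sensitive

- Filter the date column of your main table using these parameters

- Define the incremental refresh policy on the table in Power BI Desktop, then publish; the Power BI Service applies the policy on each refresh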

Data Source Integration Strategies

Working with Multiple Data Sources

Modern business intelligence often requires combining data from various sources. Power BI excels at this, but it requires thoughtful planning.

Common Integration Scenarios:

- Combining CRM data with financial systems

- Integrating web analytics with sales data

- Merging survey responses with customer databases

- Connecting social media metrics with marketing campaign data

Best Practices for Multi-Source Integration: Establish a common key structure across all data sources before attempting integration. This might require creating mapping tables or standardizing identifier formats.

Consider data refresh schedules and dependencies. Some data sources update hourly, while others might only update daily or weekly. Plan your refresh strategy accordingly.

Handling Different Data Refresh Requirements

Different business scenarios require different refresh strategies:

Real-Time Analysis: Use DirectQuery for data that must always show the most current values.

Daily Business Reporting: Schedule automatic refreshes during off-hours.

Historical Analysis: Import data for better performance since real-time updates aren’t critical.

Performance Optimization Strategies

Query Folding and Data Source Optimization

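Query folding is a Power Query capability that translates transformation steps into a native query (such as SQL) that the source system executes, rather than performing them in Power BI. Because the source returns only the rows and columns you need, this can dramatically improve performance.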

Operations That Support Query Folding:

- Basic filtering and sorting

- Column selection and removal

- Simple aggregations

- Join operations

Operations That Break Query Folding:

- Complex custom functions

- Certain data type conversions

- Advanced text manipulation

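To confirm that your transformations are being folded, right-click a step under Applied Steps in Power Query Editor and check whether “View Native Query” is available, which is a reliable signal for most relational sources. Query Diagnostics gives a more detailed view when you need it.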

Memory and Storage Management

Dataset Size Optimization:

- Remove unnecessary columns early in the transformation process

- Use appropriate data types to minimize memory usage

- Consider aggregating detailed transactional data if row-level detail isn’t needed

- Build one dedicated date table and turn off Auto date/time, which otherwise creates a hidden date table for every date column in the model
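A dedicated date table can be created as a DAX calculated table. The sketch below assumes the fact table is Sales with a Date column, as in the earlier examples; the table name DimDate and the column names are illustrative choices.

```dax
DimDate =
// Cover whole calendar years spanning the dates found in Sales.
VAR FirstDate = DATE ( YEAR ( MIN ( Sales[Date] ) ), 1, 1 )
VAR LastDate = DATE ( YEAR ( MAX ( Sales[Date] ) ), 12, 31 )
RETURN
    ADDCOLUMNS (
        CALENDAR ( FirstDate, LastDate ),
        "Year", YEAR ( [Date] ),
        "Month Number", MONTH ( [Date] ),
        "Month", FORMAT ( [Date], "MMM" ),
        "Quarter", "Q" & QUARTER ( [Date] )
    )
```

After creating the table, relate DimDate[Date] to Sales[Date] and mark it as a date table so time intelligence functions can use it. Sorting Month by Month Number keeps months in calendar order in visuals.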

Compression Strategies: Power BI uses advanced compression algorithms, but you can help by:

- Reducing cardinality in text columns through categorization

- Avoiding calculated columns when measures would work equally well

- Using integer encoding for repetitive text values
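As an illustration of preferring a measure over a calculated column, suppose the Sales table also had a Cost column (an assumption; it does not appear in the original examples). Rather than storing a margin value on every row, a measure can compute it on demand.

```dax
Total Margin =
// Nothing extra is stored in the model; the subtraction happens at
// query time on two already-compressed columns.
SUM ( Sales[Amount] ) - SUM ( Sales[Cost] )
```

A per-row margin column would add one more high-cardinality numeric column to compress and refresh, while the measure gives the same totals in any visual.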

Testing and Validation Procedures

Data Quality Checks

Before deploying your dataset, implement comprehensive quality checks:

Completeness Validation:

- Verify that all expected data sources are connected and loading

- Check for missing values in critical fields

- Ensure date ranges are complete and logical

Accuracy Testing:

- Cross-reference calculated measures with known values from source systems

- Test edge cases and boundary conditions

- Validate that relationships are working correctly by spot-checking aggregations
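A quick reconciliation query can support these checks. The sketch below, intended for DAX query view, summarizes row counts, totals, date ranges, and blank amounts per region so they can be compared against figures from the source system. It assumes Region is a column of the Sales table.

```dax
// One row per region with figures that are easy to compare
// against the source system. Column names are assumptions.
EVALUATE
SUMMARIZECOLUMNS (
    Sales[Region],
    "Row Count", COUNTROWS ( Sales ),
    "Total Amount", SUM ( Sales[Amount] ),
    "Earliest Date", MIN ( Sales[Date] ),
    "Latest Date", MAX ( Sales[Date] ),
    "Missing Amounts", COUNTBLANK ( Sales[Amount] )
)
ORDER BY Sales[Region]
```

If the row counts or totals differ from the source, the usual suspects are filters applied in Power Query, duplicate rows, or a failed type conversion.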

Performance Testing:

- Test report performance with the full dataset

- Verify refresh times meet business requirements

- Check memory usage and resource consumption

User Acceptance Testing

Stakeholder Review Process: Present the dataset structure and key measures to business stakeholders before full deployment. This helps identify missing business logic or calculation errors early.

Documentation Creation: Document all calculated measures, business rules, and data transformation logic. This documentation is crucial for maintenance and troubleshooting.

Common Pitfalls and How to Avoid Them

Data Modeling Mistakes

Circular Relationships: These occur when you create a chain of relationships that loops back on itself. Power BI will warn you about these, and they should be resolved by restructuring your data model or using alternative calculation approaches.

Many-to-Many Relationships Without Proper Context: Current versions of Power BI handle many-to-many relationships better than earlier ones did, but they can still cause unexpected results, such as totals that don’t equal the sum of their rows, if not properly understood and tested.

Over-Normalization: While normalized data structures are good for operational databases, analytical models often benefit from some denormalization for better performance and easier report creation.

Performance Issues

Importing Too Much Historical Data: Be realistic about how much historical data your reports actually need. Often, 2-3 years of detailed data plus summarized historical trends is sufficient.

Complex Calculated Columns: Overuse of complex calculated columns can significantly impact performance. Consider whether the same logic could be implemented as measures or moved to the data source.

Inefficient DAX Expressions: Poor DAX coding practices can create performance bottlenecks. Learn to write efficient DAX by understanding evaluation context and avoiding row-by-row calculations where possible.
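A common example is filtering a whole table when a single column would do. The two measures below are a sketch that reuses the Total Sales measure and assumes a Region column in Sales; the measure names and the "West" value are illustrative.

```dax
West Sales Slow =
// FILTER iterates every row of Sales to build the filter.
CALCULATE (
    [Total Sales],
    FILTER ( Sales, Sales[Region] = "West" )
)

West Sales Fast =
// A column predicate only touches the distinct values of Region.
// KEEPFILTERS preserves any Region filter already applied by the
// report, matching the behavior of the slower version.
CALCULATE (
    [Total Sales],
    KEEPFILTERS ( Sales[Region] = "West" )
)
```

Both should return the same numbers; the difference shows up in query time on large tables. Performance Analyzer in Power BI Desktop can be used to compare them.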

Security and Governance Considerations

Data Privacy and Compliance

Sensitive Data Handling: Identify and properly handle personally identifiable information (PII) and other sensitive data. This might involve masking, encryption, or exclusion from the dataset entirely.

Compliance Requirements: Ensure your dataset creation process complies with relevant regulations like GDPR, HIPAA, or industry-specific requirements.

Access Control and Sharing

Workspace Security: Organize datasets within appropriate Power BI workspaces with proper access controls. Consider the principle of least privilege when assigning permissions.

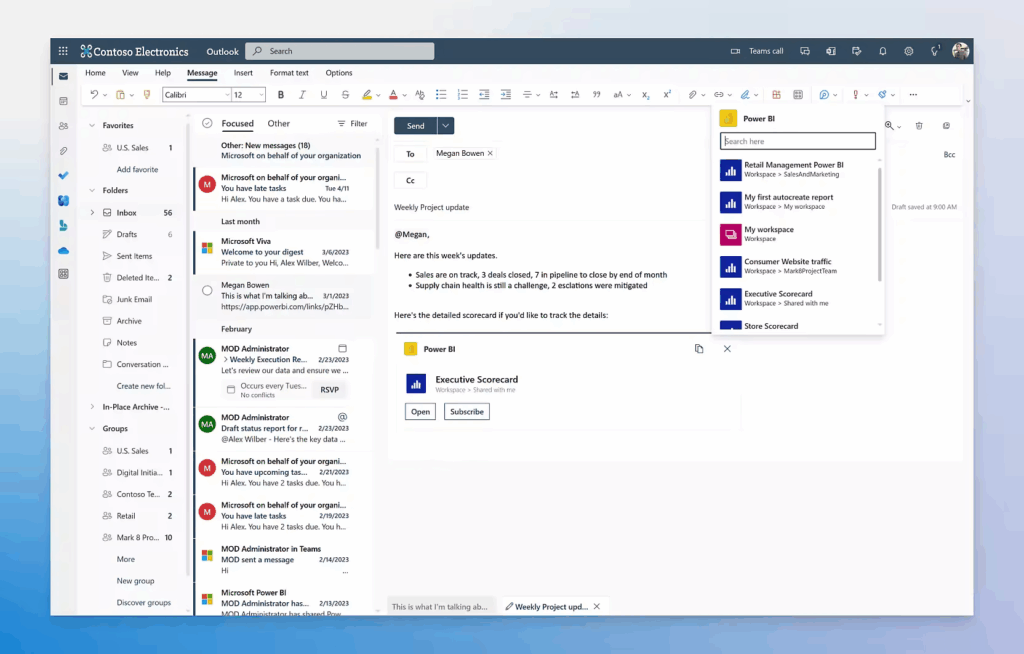

App Distribution vs Direct Sharing: For broader organizational distribution, consider packaging datasets and reports into Power BI apps rather than sharing individual artifacts.

Future-Proofing Your Dataset Strategy

Scalability Planning

Design your datasets with growth in mind. Consider how your data model will handle:

- Increased data volume over time

- Additional data sources and integration requirements

- Growing numbers of users and concurrent access

- Expanding analytical requirements

Technology Evolution

Stay informed about new Power BI features and capabilities that might improve your dataset design:

- Enhanced AI and machine learning integration

- Improved cloud connectivity options

- Advanced security and governance features

- Performance optimization tools

Conclusion: Building Datasets That Drive Business Value

Creating effective Power BI datasets is both an art and a science. It requires technical skills in data transformation and modeling, but also business acumen to understand what insights will be most valuable to your organization.

The investment you make in properly designing and building your datasets pays dividends throughout the life of your Power BI implementation. Well-structured datasets make report creation faster, ensure consistent business logic across all analyses, and provide the flexibility to answer new business questions as they arise.

Remember that dataset creation is an iterative process. Your first version doesn’t need to be perfect; it needs to be functional and provide immediate value. You can continuously improve and expand your datasets as you learn more about your organization’s analytical needs and as new data sources become available.

The key is to start with solid fundamentals: clean data, proper relationships, efficient data types, and clear documentation. Build these habits into your dataset creation process, and you’ll create Power BI solutions that truly transform how your organization uses data to make decisions.

Whether you’re analyzing sales trends, monitoring operational performance, or exploring customer behavior patterns, the quality of your insights depends entirely on the quality of your underlying dataset. Invest the time to do it right, and your Power BI implementation will deliver value far beyond what you initially imagined possible.